Last Updated on August 7, 2025 by Techcanvass Academy


What is a Power BI Semantic Model?

A Power BI semantic model, previously called a Power BI dataset (or a shared dataset when reused across reports), organizes data in a structured format for reporting and analysis. It defines tables, the relationships between them, calculations, and business rules. It serves as a single, central data layer, so everyone in an organization can access data in a consistent, fast, and secure way. This helps teams use self-service tools to analyze data and make decisions.
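As a rough mental model only (Power BI stores semantic models in its own engine and defines calculations in DAX, not Python), the hypothetical `SemanticModel` class below bundles tables, relationships, and a measure in one shared object. The point it illustrates is that every report evaluates the same central measure definition instead of re-implementing it; all names and data are made up.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SemanticModel:
    """Hypothetical, much-simplified picture of what a semantic model holds."""
    tables: dict[str, list[dict]] = field(default_factory=dict)
    # Each relationship is (from_table, to_table, key_column).
    relationships: list[tuple[str, str, str]] = field(default_factory=list)
    measures: dict[str, Callable[["SemanticModel"], float]] = field(default_factory=dict)

    def evaluate(self, measure: str) -> float:
        # Every report calls the same shared definition.
        return self.measures[measure](self)


model = SemanticModel()
model.tables["Sales"] = [{"product_id": 1, "amount": 120.0}, {"product_id": 2, "amount": 80.0}]
model.tables["Product"] = [{"product_id": 1, "name": "Bike"}, {"product_id": 2, "name": "Helmet"}]
model.relationships.append(("Sales", "Product", "product_id"))
model.measures["Total Sales"] = lambda m: sum(r["amount"] for r in m.tables["Sales"])

print(model.evaluate("Total Sales"))  # 200.0
```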

Here are some common use cases for creating Power BI semantic models:

- Centralized Data Governance: Ensures consistent definitions, relationships, and business logic across reports, promoting data accuracy and consistency across the organization.

- Simplified Self-Service BI: Gives business users a ready-made, well-defined model on which to build their own reports and dashboards, without having to model the data themselves.

- Data Security with Row-Level Security (RLS): Lets you control who can see what data by setting up security roles, so users only see the information they are allowed to access.

- Performance Optimization: Makes reports run faster through a well-designed table structure, clearly defined relationships, and pre-summarized (aggregated) data, which matters most when working with large volumes of data.
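In Power BI, RLS roles are defined in the model as DAX filter expressions (for example, `[Region] = "West"` on a Sales table). The Python sketch below only simulates the idea. The table, role names, and user assignments are hypothetical. It treats a user's roles as additive, so a row is visible if any of that user's roles allows it, which is how Power BI combines multiple roles.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Sale:
    region: str
    amount: float


# Hypothetical roles: each role is a row filter, playing the part of a DAX
# filter expression such as [Region] = "West" on a Sales table.
ROLES: dict[str, Callable[[Sale], bool]] = {
    "West Sales": lambda row: row.region == "West",
    "East Sales": lambda row: row.region == "East",
}

# Hypothetical user-to-role assignments.
USER_ROLES: dict[str, list[str]] = {
    "ana@contoso.com": ["West Sales"],
    "raj@contoso.com": ["West Sales", "East Sales"],
}


def visible_rows(user: str, rows: list[Sale]) -> list[Sale]:
    """Keep a row if ANY of the user's roles allows it (roles are additive).

    In this sketch, a user with no roles sees no rows.
    """
    filters = [ROLES[name] for name in USER_ROLES.get(user, [])]
    return [row for row in rows if any(f(row) for f in filters)]


sales = [Sale("West", 100.0), Sale("East", 250.0), Sale("North", 75.0)]
print([r.region for r in visible_rows("ana@contoso.com", sales)])    # ['West']
print([r.region for r in visible_rows("raj@contoso.com", sales)])    # ['West', 'East']
print([r.region for r in visible_rows("guest@contoso.com", sales)])  # []
```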

Different connection types in a Power BI semantic model

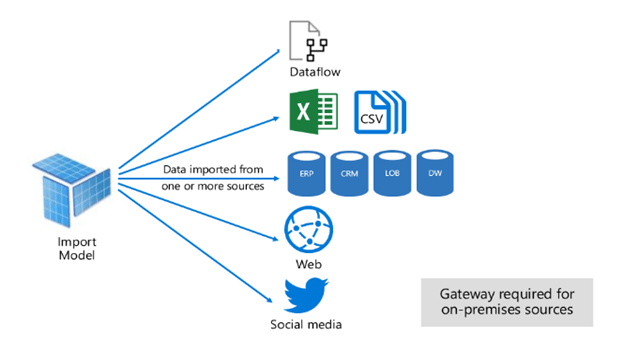

1. Import Mode

Description: Import mode loads a copy of the source data into the semantic model's in-memory storage, enabling high-speed analytics and complex calculations. Once the data is imported, reports query this cached copy rather than the source system, so they show data as of the last refresh.
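The snapshot behavior can be sketched in Python, with an in-memory SQLite database as a hypothetical stand-in for the source system. `ImportedTable` is an illustrative class, not a Power BI API: it copies rows on refresh and answers report queries from that copy, so a change at the source stays invisible until the next refresh.

```python
import sqlite3


class ImportedTable:
    """Hypothetical stand-in for an Import-mode table: a cached copy of source rows."""

    def __init__(self, conn: sqlite3.Connection, query: str):
        self._conn = conn
        self._query = query
        self.rows: list[tuple] = []
        self.refresh()

    def refresh(self) -> None:
        # A refresh re-reads the source and replaces the cached copy.
        self.rows = self._conn.execute(self._query).fetchall()

    def total(self) -> float:
        # Report queries are answered from the cache; the source is not contacted.
        return sum(amount for (amount,) in self.rows)


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (amount REAL)")
source.executemany("INSERT INTO sales VALUES (?)", [(100.0,), (250.0,)])

table = ImportedTable(source, "SELECT amount FROM sales")
source.execute("INSERT INTO sales VALUES (50.0)")  # change at the source after import
print(table.total())  # 350.0: still the imported snapshot
table.refresh()
print(table.total())  # 400.0: the new row appears only after a refresh
```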

Use Cases:

- Reports where fast, interactive performance is a priority.

- Small to medium-sized datasets that can fit into memory.

- Scenarios requiring offline access to reports where real-time data is not essential.

- Situations where high performance is critical for complex calculations and transformations.

Best Practices:

- Optimize Data Volume: Import only the data you need by filtering rows and excluding unnecessary columns.

- Use Aggregated Data: Where possible, summarize data at the source to minimize the size of the imported dataset.

- Schedule Data Refresh: Set up a data refresh schedule that aligns with your reporting needs to keep data up-to-date.

- Optimize Transformations: Perform data cleaning and transformations in Power Query to reduce the work done inside the Power BI Semantic Model. Prefer measures to calculated columns where possible, because calculated columns are stored in the model and increase its size.

- Monitor Dataset Size: Keep the dataset size manageable to avoid memory and performance issues.
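To illustrate filtering rows, dropping unused columns, and summarizing at the source before import, the sketch below uses an in-memory SQLite database as a hypothetical stand-in for the source; the table, columns, and date cutoff are made up. In a real project, the same shaping would usually live in a source view or in Power Query.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE sales (order_id INTEGER, order_date TEXT, region TEXT, "
    "amount REAL, customer_note TEXT)"
)
source.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        (1, "2023-12-30", "West", 80.0, "gift wrap"),
        (2, "2024-01-05", "West", 100.0, "rush"),
        (3, "2024-01-20", "West", 150.0, ""),
        (4, "2024-01-22", "East", 200.0, "call first"),
        (5, "2024-02-03", "East", 50.0, ""),
    ],
)

# Naive import: every row and every column, including ones no report uses.
full_import = source.execute("SELECT * FROM sales").fetchall()

# Leaner import: keep only 2024 rows, drop unused columns, and
# summarize to month x region at the source.
lean_import = source.execute(
    """
    SELECT substr(order_date, 1, 7) AS month, region, SUM(amount) AS total
    FROM sales
    WHERE order_date >= '2024-01-01'
    GROUP BY month, region
    ORDER BY month, region
    """
).fetchall()

print(len(full_import), "rows x 5 columns")  # 5 rows x 5 columns
print(len(lean_import), "rows x 3 columns")  # 3 rows x 3 columns
print(lean_import)
```

The trade-off is granularity: once data is imported at month x region level, the model can no longer answer order-level questions, so aggregate only to the level your reports actually need.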

Limitations:

- Large datasets may exceed memory capacity and degrade performance.

- Refreshes can be time-consuming for large datasets.

- Does not support real-time data updates.

Import Mode Microsoft Documentation


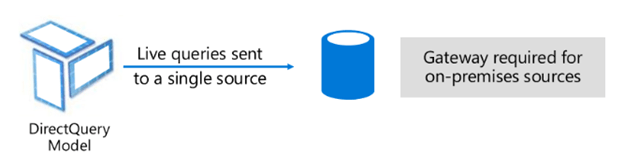

2. DirectQuery Mode

Description: DirectQuery mode does not store data in the Power BI Semantic Model's memory; instead, it sends queries to the data source whenever a report visual needs data. This makes it useful when reports must show the most current data.
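For contrast with Import mode, here is a Python sketch of the DirectQuery pattern, again using in-memory SQLite as a hypothetical source. `DirectQueryTable` is an illustrative class, not a Power BI API: it keeps no local copy, so every request is a round trip to the source, and changes show up immediately while the source absorbs every query.

```python
import sqlite3


class DirectQueryTable:
    """Hypothetical stand-in for a DirectQuery table: it keeps no local copy,
    and every report request becomes a query against the source."""

    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn
        self.queries_sent = 0  # how many round trips reached the source

    def total(self) -> float:
        self.queries_sent += 1
        (value,) = self._conn.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM sales"
        ).fetchone()
        return value


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (amount REAL)")
source.executemany("INSERT INTO sales VALUES (?)", [(100.0,), (250.0,)])

table = DirectQueryTable(source)
print(table.total())  # 350.0
source.execute("INSERT INTO sales VALUES (50.0)")  # change at the source
print(table.total())  # 400.0: visible immediately, no refresh needed
print(table.queries_sent)  # 2: one source query per request
```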

Use Cases:

- Real-time or near-real-time reporting requirements where up-to-date data is essential.

- Large datasets that cannot fit into Power BI’s memory or where data changes frequently.

- Scenarios where data resides in a secure location and cannot be imported for compliance reasons.

Best Practices:

- Optimize Queries at the Source: Ensure the source database is indexed and optimized for query performance.

- Limit Visuals: Minimize the number of visuals per report page to reduce the number of queries sent to the source.

- Reduce Complex Calculations: Avoid complex measures or calculated columns in Power BI and perform them at the source.

- Test Performance: Regularly test query performance to ensure acceptable response times.

- Use Cached Queries: Leverage Power BI’s query caching feature to enhance performance where possible.
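To illustrate "Optimize Queries at the Source", the sketch below uses SQLite as a hypothetical stand-in for the source database and inspects its query plan with `EXPLAIN QUERY PLAN` before and after adding an index on the filter column. The table, index name, and query are made up; your database will have its own plan-inspection tools (for example, execution plans in SQL Server).

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (order_id INTEGER, region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(i, "West" if i % 2 else "East", float(i)) for i in range(1, 1001)],
)

# A query shaped like one a region-filtered visual might send to the source.
QUERY = "SELECT SUM(amount) FROM sales WHERE region = ?"


def plan(sql: str) -> str:
    """Return SQLite's query-plan description (the 'detail' column) for sql."""
    rows = source.execute("EXPLAIN QUERY PLAN " + sql, ("West",)).fetchall()
    return " | ".join(row[3] for row in rows)


before = plan(QUERY)  # without an index: a full table scan
source.execute("CREATE INDEX idx_sales_region ON sales (region)")
after = plan(QUERY)   # with the index: a search using idx_sales_region
print("before:", before)
print("after: ", after)
```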

Limitations:

- Performance depends heavily on the source system’s capabilities.

- High latency for complex queries or large datasets.

- Limited functionality for calculated tables and relationships compared to Import mode.

- May overload the source system if not optimized.

DirectQuery Microsoft Documentation


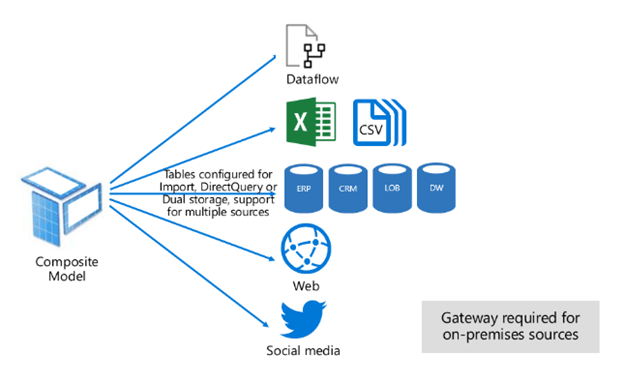

3. Composite Model

Description: Composite Models combine Import and DirectQuery modes in a single Power BI Semantic Model, so each table can use the mode that suits it. This makes it possible to combine periodically refreshed historical data with real-time data in a flexible and efficient way.
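The per-table idea can be sketched in Python with in-memory SQLite as a hypothetical source: a small product table is "imported" once into a dictionary, while the sales fact table is queried live on every call and joined to the imported lookup. The tables, data, and the `(Unknown)` bucket for unmatched keys are all illustrative assumptions, not Power BI behavior.

```python
import sqlite3
from collections import defaultdict

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE products (product_id INTEGER, category TEXT)")
source.execute("CREATE TABLE sales (product_id INTEGER, amount REAL)")
source.executemany("INSERT INTO products VALUES (?, ?)", [(1, "Bikes"), (2, "Helmets")])
source.executemany("INSERT INTO sales VALUES (?, ?)", [(1, 500.0), (2, 40.0), (1, 300.0)])

# Import-style dimension: a small, slowly changing table copied into memory once.
product_category = dict(source.execute("SELECT product_id, category FROM products"))


def sales_by_category() -> dict[str, float]:
    """Join live, DirectQuery-style fact totals to the imported dimension."""
    totals: dict[str, float] = defaultdict(float)
    # Fact rows are aggregated at the source on every call.
    for product_id, amount in source.execute(
        "SELECT product_id, SUM(amount) FROM sales GROUP BY product_id ORDER BY product_id"
    ):
        totals[product_category.get(product_id, "(Unknown)")] += amount
    return dict(totals)


print(sales_by_category())  # {'Bikes': 800.0, 'Helmets': 40.0}
source.execute("INSERT INTO sales VALUES (2, 25.0)")  # new fact row at the source
print(sales_by_category())  # {'Bikes': 800.0, 'Helmets': 65.0}, no refresh needed
```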

Use Cases:

- Scenarios requiring both historical data (via Import) and real-time data (via DirectQuery).

- Datasets combining multiple data sources with mixed performance and size requirements.

- Use cases where certain tables need frequent updates while others remain static.

Best Practices:

- Separate High-Performance Data: Use Import mode for smaller, frequently accessed tables and DirectQuery for large or real-time tables.

- Use Relationships Judiciously: Clearly define relationships between tables to avoid ambiguous joins.

- Optimize for Performance: Ensure that DirectQuery tables are optimized to handle real-time queries efficiently.

- Document the Model: Clearly document which tables use Import and which use DirectQuery for transparency within the Power BI Semantic Model.
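One lightweight way to keep such documentation is to generate it from an inventory of tables and relationships. The Python sketch below assumes a hypothetical, hand-maintained inventory (the table names, modes, and relationships are made up) and lists tables by storage mode, plus the relationships that span Import and DirectQuery tables, since those are the ones that tend to need extra review.

```python
from collections import defaultdict

# Hypothetical model inventory: table name -> storage mode.
STORAGE_MODES = {
    "Date": "Import",
    "Product": "Import",
    "Store": "DirectQuery",
    "Sales": "DirectQuery",
}

# Hypothetical relationships: (from_table, to_table).
RELATIONSHIPS = [("Sales", "Date"), ("Sales", "Product"), ("Sales", "Store")]


def document_model(modes: dict[str, str], relationships: list[tuple[str, str]]) -> str:
    """Return a plain-text summary of storage modes and cross-mode relationships."""
    by_mode: dict[str, list[str]] = defaultdict(list)
    for table, mode in sorted(modes.items()):
        by_mode[mode].append(table)
    lines = [f"{mode}: {', '.join(tables)}" for mode, tables in sorted(by_mode.items())]
    lines.append("Relationships spanning Import and DirectQuery:")
    lines += [f"  {a} -> {b}" for a, b in relationships if modes[a] != modes[b]]
    return "\n".join(lines)


print(document_model(STORAGE_MODES, RELATIONSHIPS))
```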

Limitations:

- Increased complexity in managing relationships between Import and DirectQuery tables.

- Performance bottlenecks may arise if DirectQuery tables are not optimized.

- Some limitations in feature compatibility across the two modes.

Composite Model Microsoft Documentation


Conclusion

Choosing the right connection type and following the best practices for each ensures efficient data handling and report performance in Power BI. Whether you use Import for high-speed analytics, DirectQuery for real-time data, Live Connections for enterprise models, or Composite Models for flexibility, following these guidelines will help you get the most value from your Power BI Semantic Model projects.

Frequently Asked Questions on Power BI Semantic Model

Q. What is a Power BI Semantic Model?

A Power BI semantic model is a centralized, organized data layer that defines tables, relationships, and business rules for analysis. It ensures everyone in your organization works with a single, consistent, and secure source of truth for reporting and self-service analytics.

Q. What are the different connection modes for a Power BI Semantic Model?

There are three primary connection modes: Import, which loads data into Power BI’s memory for fast performance; DirectQuery, which queries the source system in real-time; and a Composite model, which combines both methods for flexibility.

Q. When should I use Import mode in Power BI?

You should use Import mode when you have small to medium-sized datasets and require high-speed performance for complex calculations. It is the best option for scenarios where you need fast interactive reports and don’t require real-time data updates.

Q. What are the benefits of using DirectQuery mode?

DirectQuery mode is ideal for very large datasets and situations that require real-time or near-real-time data. It doesn’t store data in Power BI, which is crucial for compliance or when data needs to remain in its secure source location.

Q. What is a Composite Model in Power BI?

A Composite model is a flexible connection mode that combines both Import and DirectQuery in a single report. It allows you to leverage the speed of imported data for historical analysis while also using real-time data from the source system for up-to-the-minute insights.

Q. Does a Power BI Semantic Model help with data security?

Yes. By creating a centralized semantic model, you can manage security roles and permissions in one place. This ensures that users only have access to the data they are authorized to see, promoting data governance and compliance.